ARC Centre of Excellence for Automated Decision-Making & Society

I'm a Chief Investigator of the ADM+S. Responsible, ethical, and inclusive automation.

Our research centre at QUT: world-leading communication, media, and law research for a flourishing digital society.

My book is free to download!

I'm a founding board member. We're making Facebook and Instagram accountable for human rights.

Our research: What works to address inequality online?

I helped draft the principles on transparency and accountability in content moderation, informed by our research on user perceptions of content moderation.

Research: Only a tiny proportion of Airbnb listings in popular tourist destinations are clearly labelled with accessibility features.

Profile by Michael Koziol, Sydney Morning Herald; image by Attila Csaszar.

by Steven Levy @ WIRED, 8 Nov 2022

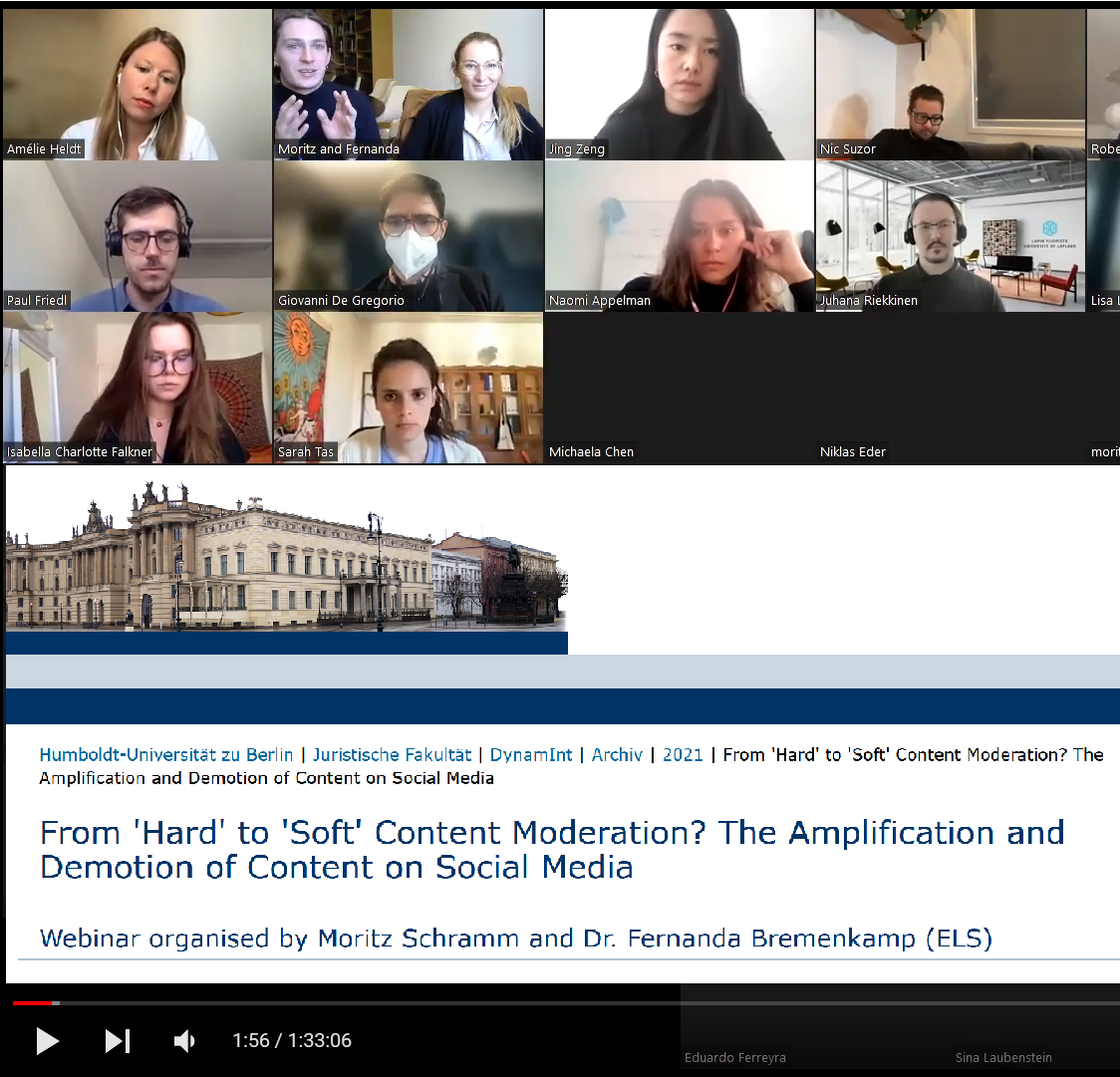

Webinar: 'The Amplification and Demotion of Content on Social Media', organised by Moritz Schramm and Dr. Fernanda Bremenkamp (ELS)

Plenary session at Internet Governance Forum 2023 in Kyoto: 'Evolving Trends in Mis- & Dis-Information' (8 October 2023)

I am unable to help with Facebook or Instagram suspensions, takedowns, or other concerns by email.

I receive a lot of email and I'm just not able to respond to these enquiries.